Get started with LLM

Prerequisite

It would be easier if we have knowledge on NLP or Embedding, I would recommend Databricks course, the course start from the NLP knowledge till putting LLM to production.

What is LLM

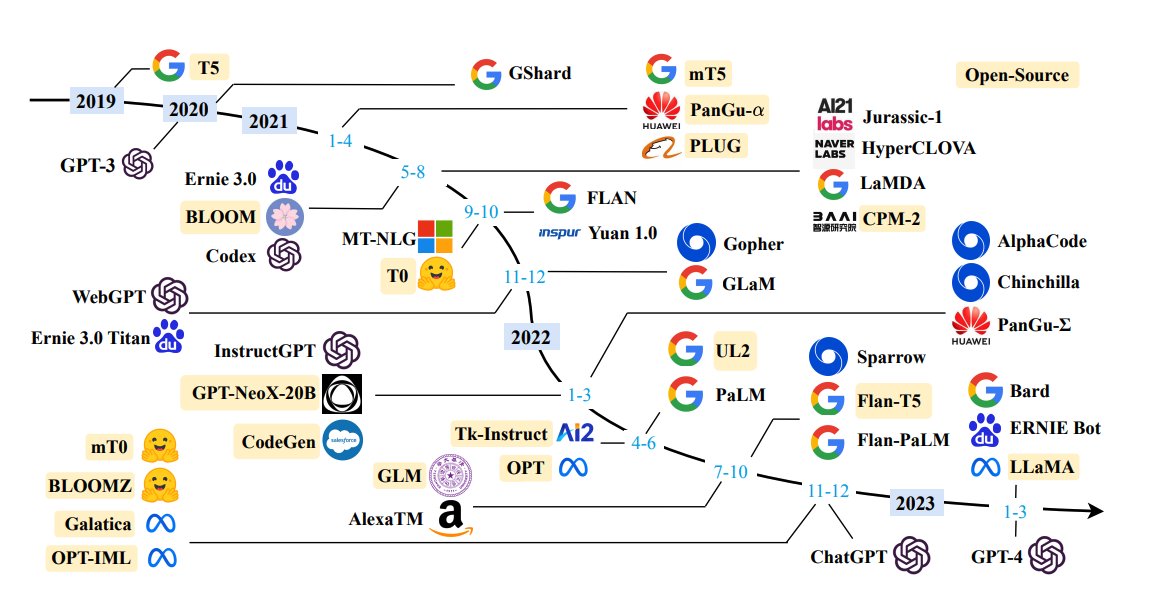

LLM stands for Large Language Model, which aim to predict the next words. There are long history of LLM from word2vec, LSTM, Transformer, BERT, ELMo, till the PaLM, GPT (Generative Pre-training Transformer), an interesting blog post explain how GPT3 works , and the Gemini, multimodal model from google

Any model lead to the same results, the only difference is dataset

The model itself trying to predict the probability distribution of the next word, after training for the very large dataset, and massive parameters, as a result we can use it to do most of the work. On the other hand, since it is a next word prediction, it can produce the hallucination result.

What we should know about it

We can read a good comprehensive blog post by Eugene - compile the patterns for building LLM-based system, and also great blog post by Chip - addressing the challenges in LLM research

To start using it

- The easiest way to start using is to use ChatGPT

- Then we start to learn different type of prompting technique

- Then we may would like to apply LLM with our own data, so I recommend start learning about RAG

- Then we may would like to create our own application, I would recommend start learning about LangChain or LlamaIndex

- At this point, I may recommend to start reading the below information, to understand the overview, approaches, and technique of LLM.

The first few courses, we would learn about LangChain from Deep Learning.AI, these courses are easy to follow, and we can start building our PoC right away.

- LangChain for LLM App Development: Link

- LangChain Chat with Your Data: Link

- Functions, tools, and Agents with LangChain: Link

- Bonus: if we have no experience with building PoC website using python, we can check out the streamlit or Gradio

Evaluations

As mention by Eugene, evaluation is crucial. Then we would need to learn how to evaluate our LLM or RAG.

- Quality and Safety for LLM Applications: Link

- Building and Evaluating Advanced RAG: Link

- Ragas

- WhyLogs

- Span Markers

- LLM Security

To maximizing LLM performance

I would recommend starting with youtube video from OpenAI, it will give us an overview of RAG and fine-tuning with the result.

Starting with prompting

- I would recommend learning this course ChatGPT Prompt Engineering for Developers: Link

- Component of Prompts

- Then we can explore different type of prompting in promptingguide.ai, which will summarise different prompting technique with the example such as zero-shot prompting, ReAct prompting

- There are also some challenges with the long text address in the Chip blog, and we can read more the detail Lost in the Middle: How Language Models Use Long Contexts, also with the new findings from Anthropic on their model Claude 2.1 which said, only adding 'Here is the most relevant sentence in the context:' yield the better result

Then if we focusing on the RAG, we read the follow.

- Retrieval augmented generation: Keeping LLMs relevant and current

- 10 Ways to Improve the Performance of Retrieval Augmented Generation Systems

- A Guide on 12 Tuning Strategies for Production-Ready RAG Applications

- Enterprise LLM Challenges and how to overcome them - Snorkel

WE can skip this for later - To go deeper on improving RAG, we can go deeper to understand how vector store works, and different retrieval algorithms, or strategies.

- We may start by learning the course by weaviate

- Then scan through different retrievers implemented by LLamaIndex

- Also start reading about vector store

- Finally, there are a different ANN embedding approach mention in Eugene blog also a good to read through (LSH, FAISS, HNSW, and ScaNN)

Then it's a good time to start exploring about different model, and how to fine-tune them

- To start with course from LAMINI on DeepLearning.ai: Fine-tuning Large Language Models

- then read a good summary from Eugene

- Then you will need to prepare your dataset

- Select the pre-trained models

- Update model architecture

- Pick a fine-tuning approach

- Training them

- A great courses by Databricks: Large Language Models: Foundation Models from the Ground Up

Put the model to production

Start by reading Emerging Architectures for LLM Application, this help us paint the big picture of the solutions we should apply in production, with additional resources to read from on different component. Or article How to Build Knowledge Assistant at Scale, written by QuantumBlack